Predictive models that use data from individuals are an important source of information in medical settings. Predictive modelling, or the use of electronic algorithms to forecast future events, makes it possible to harness the power of big data to improve people’s health and reduce the cost of healthcare. However, this opportunity can raise policy, ethical, and legal questions.

An important area for the Prediction Modelling Group to explore is how to involve service users, carers and other stakeholders in discussions about ethical issues and in developing guidelines for the development and use of prediction models in mental health.

Blog: What can humans do to guarantee an ethical AI in healthcare?

Dr Raquel Iniesta explores the current status of AI in healthcare in a two-part blog. She looks at what we need to consider to ensure its application is ethical, and at current approaches that are helping this happen.

- Part I focuses on the reasons why we need an ethical framework to enable AI to work for healthcare.

- Part II focuses on what is being put in place to help AI in healthcare be ethical.

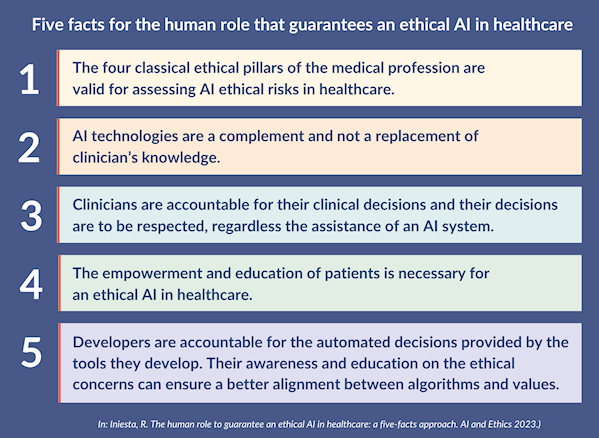

As part of her NIHR Maudsley BRC-funded work, Dr Iniesta has published a paper in the journal AI and Ethics that describes five facts that can help guarantee an ethical AI in healthcare. By providing this simple, evidence-based explanation of ethical AI and of who needs to be accountable, she hopes to offer guidance on the human action that ensures an ethical implementation of AI in healthcare.