CRIS Natural Language Processing

CRIS NLP Applications Catalogue

Natural Language Processing (NLP) is a branch of Artificial Intelligence (AI) that can be used to extract structured information from the free text of electronic health records. We have set up the Clinical Record Interactive Search (CRIS) NLP Service to facilitate the extraction of anonymised information from the free text of the clinical record at the South London and Maudsley NHS Foundation Trust. Research using data from electronic health records (EHRs) is rapidly increasing, and much of the most valuable information is found only in the free text. However, manually reviewing free text is very time-consuming. NLP methods remove the burden of manual review by extracting the information needed automatically, and they are widely used and in high demand across the research world.

Welcome to the NLP Applications Catalogue. It provides detailed information about the applications (apps) developed within the CRIS NLP Service.

This regularly updated webpage contains the details and performance of over 80 NLP applications for the automatic extraction of mental health data from the EHR, all of which we have developed and currently deploy routinely through our NLP Service.

This webpage provides details of NLP resources developed since around 2009 for use at the NIHR Maudsley Biomedical Research Centre (BRC) and its mental healthcare data platform, CRIS. In electronic health records, the most valuable information is sometimes contained only in the free text. This is particularly the case in mental healthcare, although it is not limited to that sector.

The CRIS system was developed for use within NIHR Maudsley BRC. It provides authorised researchers with regulated, secure access to anonymised information extracted from South London and Maudsley’s EHR.

South London and Maudsley NHS Foundation Trust provides mental healthcare to a defined geographic catchment of four south London boroughs (Croydon, Lambeth, Lewisham and Southwark) with around 1.3 million residents, in addition to a range of national specialist services.

General Points of Use

All applications currently in production at the CRIS NLP Service are described here.

We aim to update this webpage at least twice a year, so please check that you are using the version that corresponds to your data extraction.

Guidance for use: Every application report comprises four parts:

1) Description – the name of the application and a short explanation of the construct(s) it seeks to capture.

2) Definition – an account of how the application was developed (e.g. machine-learning or rule-based, the search terms used and the guidelines for annotators), the annotation classes produced and inter-rater reliability results (Cohen’s kappa).

3) Performance – precision and recall are used to evaluate application performance, both in pre-annotated documents identified by the app and in unannotated documents retrieved by keyword searches of the free text in the events and correspondence sections of CRIS. a) Precision is the number of relevant (true positive) entities retrieved divided by the total number of entities retrieved (true positives plus irrelevant, i.e. false positive, entities). b) Recall is the number of relevant (true positive) entities retrieved divided by the total number of relevant entities available in the database (true positives plus false negatives). Performance testing results are listed in chronological order, for pre-annotated documents, for unannotated documents retrieved through specific keyword searches, or for both. The last result listed corresponds to the version of the application currently in use by the NLP Service. Search terms used for recall testing are presented where necessary, as are details of any post-processing rules that have been implemented. Notes on observations by annotators and performance testers are included where applicable.

4) Production – information is provided on the version of the application currently in use by the NLP Service and the corresponding deployment schedule.
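The precision and recall definitions above, and the Cohen's kappa used for inter-rater reliability, can be illustrated with a minimal Python sketch. The function names and the counts in the usage lines are hypothetical and are not taken from any application's evaluation. Precision as defined here is the positive predictive value, TP / (TP + FP), which is a different quantity from specificity, TN / (TN + FP). The catalogue reports kappa as a percentage, so a kappa of 0.85 appears as 85%.

```python
from collections import Counter


def precision(tp: int, fp: int) -> float:
    """Relevant entities retrieved / all entities retrieved: TP / (TP + FP)."""
    return tp / (tp + fp)


def recall(tp: int, fn: int) -> float:
    """Relevant entities retrieved / all relevant entities: TP / (TP + FN)."""
    return tp / (tp + fn)


def cohens_kappa(rater_a: list[str], rater_b: list[str]) -> float:
    """Chance-corrected agreement between two annotators labelling the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must label the same non-empty set of items")
    n = len(rater_a)
    # Observed agreement: share of items given the same label by both raters.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Agreement expected by chance, from each rater's label frequencies.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (observed - expected) / (1 - expected)


# Hypothetical counts and labels, for illustration only.
print(precision(tp=45, fp=5))  # 0.9
print(recall(tp=45, fn=15))    # 0.75
print(cohens_kappa(["pos", "pos", "neg", "neg"], ["pos", "neg", "neg", "neg"]))  # 0.5
```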

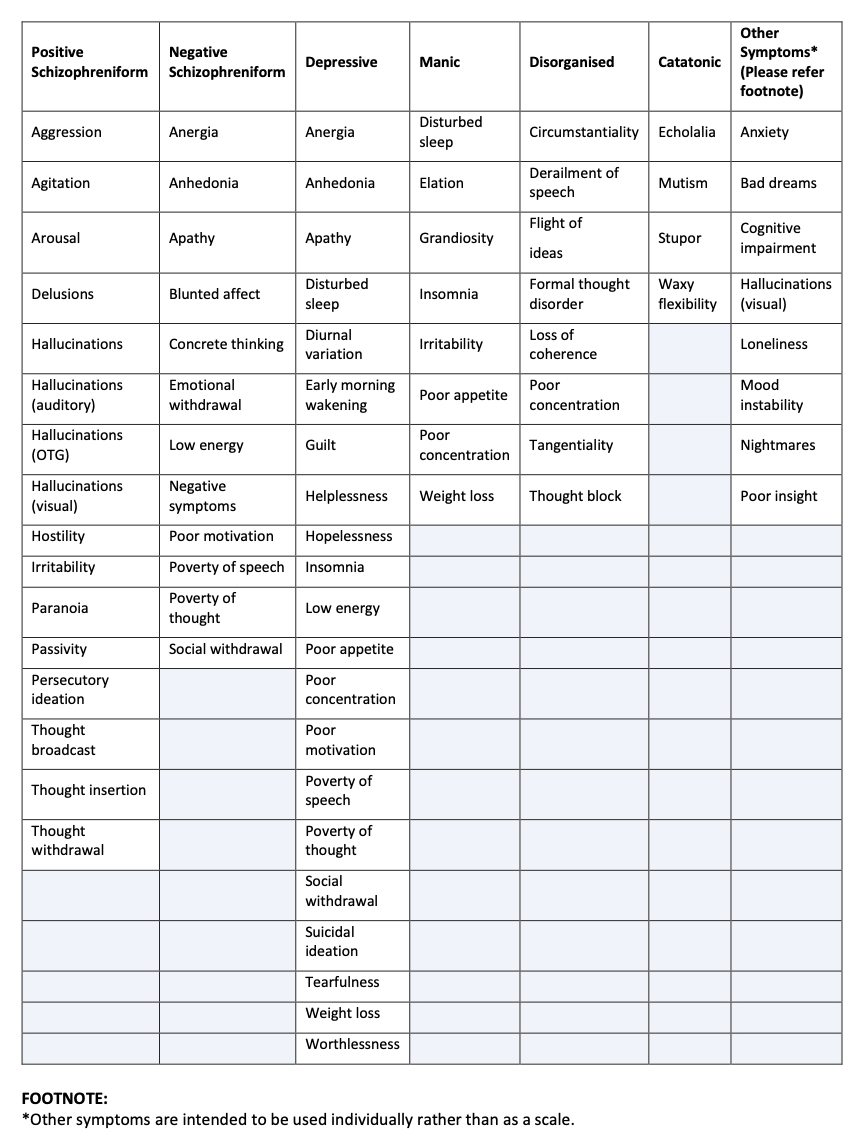

Symptom scales (see proposed allocations)

As the number of symptom applications is increasing, we regularly evaluate how to make these available to researchers in a flexible and meaningful manner.

To this end, and to reduce the risk of including too many and/or highly correlated variables in analyses, we currently use symptom scales that group positive schizophreniform, negative schizophreniform, depressive, manic, disorganized and catatonic symptoms.

The ‘other’ symptoms are those whose applications were developed separately for different purposes; they are intended to be used individually rather than in scales.

Each symptom receives a score of 1 if it is recorded as positive within a given surveillance period, and 0 otherwise.

Individual symptoms are then summed to generate a total score of:

• 0 – 16 for positive schizophreniform

• 0 – 12 for negative schizophreniform

• 0 – 21 for depressive

• 0 – 8 for manic

• 0 – 8 for disorganized

• 0 – 4 for catatonic

Unless there is a particular reason to do otherwise (to be discussed with the NLP team), we encourage researchers to use the scales when extracting and analysing data from the symptom applications.
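The scale scoring described above (1 per symptom recorded as positive in the surveillance period, summed within each scale) can be sketched in Python as follows. The function is hypothetical, and so is the symptom-to-scale mapping: the four catatonic symptoms shown are an illustrative allocation only, not the NLP team's proposed allocation. A positive mention is assumed to count when its date falls within the period, inclusive of both ends.

```python
from datetime import date

# Maximum possible score per scale, as listed in the catalogue.
SCALE_MAXIMA = {
    "positive_schizophreniform": 16,
    "negative_schizophreniform": 12,
    "depressive": 21,
    "manic": 8,
    "disorganized": 8,
    "catatonic": 4,
}

# HYPOTHETICAL symptom-to-scale allocation, for illustration only.
EXAMPLE_ALLOCATION = {
    "catatonic": ["echolalia", "mutism", "stupor", "waxy_flexibility"],
}


def scale_scores(mentions, start, end, allocation):
    """Score one patient's symptom scales for a surveillance period.

    mentions: iterable of (symptom, mention_date) pairs, one per positive mention.
    A symptom scores 1 if it has at least one positive mention in [start, end],
    otherwise 0; each scale score is the sum over its member symptoms.
    """
    positive = {symptom for symptom, when in mentions if start <= when <= end}
    scores = {}
    for scale, members in allocation.items():
        if len(members) > SCALE_MAXIMA[scale]:
            raise ValueError(f"{scale} has more symptoms than its maximum score")
        scores[scale] = sum(1 for symptom in members if symptom in positive)
    return scores


mentions = [
    ("mutism", date(2023, 3, 2)),
    ("mutism", date(2023, 3, 9)),      # repeated mentions still score 1
    ("stupor", date(2023, 4, 1)),
    ("echolalia", date(2022, 12, 1)),  # outside the period, so not counted
]
print(scale_scores(mentions, date(2023, 1, 1), date(2023, 6, 30), EXAMPLE_ALLOCATION))
# {'catatonic': 2}
```

Collapsing repeated mentions into a single 1 per symptom is what keeps each scale within its stated range.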

Version

V3.3

Contents

Symptoms

Aggression

Agitation

Anergia

Anhedonia

Anosmia

Anxiety

Apathy

Arousal

Bad Dreams

Blunted Affect

Circumstantiality

Cognitive Impairment

Concrete Thinking

Delusions

Derailment

Disturbed Sleep

Diurnal Variation

Drowsiness

Early Morning Wakening

Echolalia

Elation

Emotional Withdrawal

Eye Contact (Categorisation)

Fatigue

Flight of Ideas

Fluctuation

Formal Thought Disorder

Grandiosity

Guilt

Hallucinations (All)

Hallucinations - Auditory

Hallucinations - Olfactory Tactile Gustatory (OTG)

Hallucinations - Visual

Helplessness

Hopelessness

Hostility

Insomnia

Irritability

Loss of Coherence

Low Energy

Mood Instability

Mutism

Negative Symptoms

Nightmares

Obsessive Compulsive Symptoms

Paranoia

Passivity

Persecutory Ideation

Poor Appetite

Poor Concentration

Poor Eye Contact

Poor Insight

Poor Motivation

Poverty Of Speech

Poverty Of Thought

Psychomotor Activity (Categorisation)

Self Injurious Action

Smell

Social Withdrawal

Stupor

Suicidal Ideation

Tangentiality

Taste

Tearfulness

Thought Block

Thought Broadcast

Thought Insertion

Thought Withdrawal

Waxy Flexibility

Weight Loss

Worthlessness

Physical Health Conditions

Asthma

Bronchitis

Cough

Crohn's Disease

Falls

Fever

Hypertension

Multimorbidity - 21 Long Term Conditions (MedCAT)

Pain

Rheumatoid Arthritis

HIV

HIV Treatment

Contextual Factors

Amphetamine

Cannabis

Chronic Alcohol Abuse

Cocaine or Crack Cocaine

MDMA

Smoking

Education

Occupation

Lives Alone

Loneliness

Violence

Interventions

CAMHS - Creative Therapy

CAMHS - Dialectical Behaviour Therapy (DBT)

CAMHS - Psychotherapy/Psychosocial Intervention

Cognitive Behavioural Therapy (CBT)

Depot Medication

Family Intervention

Medication

Social Care - Care Package

Social Care - Home Care

Social Care - Meals on Wheels

Outcome and Clinical Status

Blood Pressure (BP)

Body Mass Index (BMI)

Brain MRI report volumetric Assessment for dementia

Cholesterol

EGFR

HBA1C

Lithium

Mini-Mental State Examination (MMSE)

Neutrophils

Non-Adherence

Diagnosis

Treatment-Resistant Depression

Bradykinesia (Dementia)

Trajectory

Tremor (Dementia)

QT

White Cells

Miscellaneous

Family Contact

Forms

Quoted Speech

Symptom Scales (see notes)

Symptoms

Aggression

CRIS NLP Service

Brief Description

Application to identify instances of aggressive behaviour in patients, including verbal, physical and sexual aggression.

Development Approach

Development approach: Machine-learning.

Classification of past or present symptom: Both.

Classes produced: Positive

Output and Definitions

The output includes:

Examples of positive mentions:

“reported to be quite aggressive towards…”,

“violence and aggression, requires continued management and continues to reduce in terms of incidents etc”.

Examples of negative / irrelevant mentions (not included in the output):

“no aggression”,

“no evidence of aggression”

“aggression won’t be tolerated”.

Search term (case insensitive): *aggress*
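Each application lists the search term used to retrieve candidate mentions. Below is a minimal sketch of how a wildcard term such as *aggress* might be matched, assuming that `*` stands for any run of letters or digits and that matching is case insensitive; the catalogue does not specify the exact search syntax, so `wildcard_to_regex` is a hypothetical helper. The term alone cannot separate positive from negative mentions: “no aggression” matches just as “quite aggressive” does, and it is the application that classifies each retrieved mention.

```python
import re


def wildcard_to_regex(term: str) -> re.Pattern:
    """Compile a search term in which '*' matches any run of word characters."""
    # Escape each literal piece, then rejoin the pieces with \w* for every '*'.
    body = r"\w*".join(re.escape(piece) for piece in term.split("*"))
    # Word boundaries stop a match from starting or ending mid-word;
    # IGNORECASE mirrors "case insensitive".
    return re.compile(rf"\b{body}\b", re.IGNORECASE)


aggress = wildcard_to_regex("*aggress*")
for text in ["reported to be quite Aggressive towards", "no aggression", "calm today"]:
    match = aggress.search(text)
    print(text, "->", match.group(0) if match else None)
```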

Evaluated Performance

Cohen's k = 85% (testing done on 50 random documents).

Instance level (testing done on 100 random documents):

Precision (specificity / accuracy) = 91%

Recall (sensitivity / coverage) = 75%

Additional Notes

Run schedule – Monthly

Other Specifications

Version 1.0, Last updated: xx

DOI

Agitation

Brief Description

Application to identify instances of agitation

Development Approach

Development approach: Machine-learning.

Classification of past or present symptom: Both.

Classes produced: Positive

Output and Definitions

The output includes:

Examples of positive mentions:

“Very agitated at present, he was agitated”,

“He was initially calm but then became agitated and started staring and pointing at me towards”,

“no longer agitated” (mentions of past agitation are also included).

Examples of negative / irrelevant mentions (not included in the output):

“He did not seem distracted or agitated”,

“Not agitated”,

“No evidence of agitation”,

“A common symptom of psychomotor agitation”.

Search term (case insensitive): *agitat*

Evaluated Performance

Cohen's k = 85% (testing done on 50 random documents).

Instance level (testing done on 100 random documents):

Precision (specificity / accuracy) = 85%

Recall (sensitivity / coverage) = 79%

Patient level testing done on all patients with primary diagnosis code F32* or F33* (testing done on 30 random documents):

Precision (specificity / accuracy) = 82%
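The patient-level evaluations restrict testing to patients whose primary diagnosis code starts with F32 or F33 (in ICD-10, depressive episode and recurrent depressive disorder). The catalogue does not state how instance-level output is aggregated per patient; the minimal sketch below assumes that a patient counts as positive if at least one of their mentions is positive. All function names and patient IDs are hypothetical.

```python
from collections import defaultdict

# ICD-10: F32 = depressive episode, F33 = recurrent depressive disorder.
COHORT_PREFIXES = ("F32", "F33")


def in_cohort(primary_diagnosis: str) -> bool:
    """True if the primary diagnosis code matches F32* or F33*."""
    return primary_diagnosis.strip().upper().startswith(COHORT_PREFIXES)


def patient_level_flags(mentions, diagnoses):
    """Collapse instance-level output to one flag per in-cohort patient.

    mentions:  iterable of (patient_id, is_positive) pairs, one per mention.
    diagnoses: dict mapping patient_id to a primary diagnosis code.
    Assumption: a patient is positive if any of their mentions is positive.
    """
    flags = defaultdict(bool)
    for patient_id, is_positive in mentions:
        if in_cohort(diagnoses.get(patient_id, "")):
            flags[patient_id] = flags[patient_id] or is_positive
    return dict(flags)


diagnoses = {"p1": "F32.1", "p2": "F33", "p3": "F20.0"}  # hypothetical patients
mentions = [("p1", True), ("p1", False), ("p2", False), ("p3", True)]
print(patient_level_flags(mentions, diagnoses))
```

Patient `p3` is excluded because an F20 code falls outside the F32*/F33* cohort, even though it has a positive mention.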

Additional Notes

Run schedule – Monthly

Other Specifications

Version 1.0, Last updated: xx

DOI

Anergia

Brief Description

Application to identify instances of anergia

Development Approach

Development approach: Machine-learning.

Classification of past or present symptom: Both.

Classes produced: Positive

Output and Definitions

The output includes:

Examples of positive mentions:

“feelings of anergia…”

Examples of negative / irrelevant mentions (not included in the output):

“no anergia”,

“no evidence of anergia”,

“no feeling of anergia”.

Search term (case insensitive): *anergia*

Evaluated Performance

Cohen's k = 100% (testing done on 50 random documents).

Instance level (testing done on 100 random documents):

Precision (specificity / accuracy) = 95%

Recall (sensitivity / coverage) = 89%

Patient level testing done on all patients with primary diagnosis code F32* or F33* (testing done on 30 random documents):

Precision (specificity / accuracy) = 93%

Additional Notes

Run schedule – Monthly

Other Specifications

Version 1.0, Last updated: xx

DOI

Anhedonia

Brief Description

Application to identify instances of anhedonia (inability to experience pleasure from activities usually found enjoyable).

Development Approach

Development approach: Machine-learning.

Classification of past or present symptom: Both.

Classes produced: Positive

Output and Definitions

The output includes:

Examples of positive mentions:

“X had been anhedonic”,

“X has anhedonia”.

Examples of negative / irrelevant mentions (not included in the output):

“no anhedonia”,

“not anhedonic”,

Used in a list, not applying to the patient (e.g. typical symptoms include …);

Uncertain (might have anhedonia, ?anhedonia, possible anhedonia);

Search term(s): *anhedon*

Evaluated Performance

Cohen's k = 85% (testing done on 50 random documents).

Instance level (testing done on 100 random documents):

Precision (specificity / accuracy) = 93%

Recall (sensitivity / coverage) = 86%

Patient level testing done on all patients with primary diagnosis code F32* or F33* (testing done on 30 random documents):

Precision (specificity / accuracy) = 87%

Additional Notes

Run schedule – Monthly

Other Specifications

Version 1.0, Last updated: xx

DOI

Anosmia

Brief Description

Application to extract and classify mentions related to anosmia.

Development Approach

Development approach: Machine-learning.

Classification of past or present symptom: Both.

Classes produced: Positive

Output and Definitions

The output includes:

Examples of positive mentions:

“Loss of enjoyment of food due to anosmia”,

“COVID symptoms such as anosmia”

Examples of negative / irrelevant mentions (not included in the output):

“Nil anosmia”,

“Anosmia related to people other than the patient”,

“Mentions of medications for it”,

“Don’t come to the practice if you have any covid symptoms such as anosmia”

Search term (case insensitive): Anosmia*

Evaluated Performance

Cohen's k = 83% (testing done on 100 random documents).

Instance level (testing done on 100 random documents):

Precision (specificity / accuracy) = 83%

Recall (sensitivity / coverage) = 93%

Additional Notes

Run schedule – On demand

Other Specifications

Version 1.0, Last updated: xx

Anxiety

Brief Description

Application to extract and classify mentions related to (any kind of) anxiety.

Development Approach

Development approach: Rule-Based.

Classification of past or present symptom: Both.

Classes produced: Affirmed, Negated, and Irrelevant.

Output and Definitions

The output includes:

Examples of positive mentions:

“ZZZZZ shows anxiety problems”

Examples of negative / irrelevant mentions (not included in the output):

“ZZZZ does not show anxiety problems”

“If ZZZZ was anxious he would not take his medication”

Search Terms (case insensitive): Available on request

Evaluated Performance

Cohen’s k = 94% (testing done on 3000 random documents).

Instance level (testing done on 100 random documents):

Precision (specificity / accuracy) = 87%

Recall (sensitivity / coverage) = 97%

Additional Notes

Run schedule – Monthly

Other Specifications

Version 1.0, Last updated: xx

Apathy

Brief Description

Application to extract the presence of apathy

Development Approach

Development approach: Machine-learning.

Classification of past or present symptom: Both.

Classes produced: Positive

Output and Definitions

The output includes:

Examples of positive mentions:

“continues to demonstrate apathy”

“some degree of apathy noted”

Examples of negative / irrelevant mentions (not included in the output):

“denied apathy”

“no evidence of apathy”

“may develop apathy or as a possible side effect of medication”

“*apathy* found in quite a few names”

Search term(s): *apath*

Evaluated Performance

Cohen's k = 86% (testing done on 50 random documents).

Instance level (testing done on 100 random documents):

Precision (specificity / accuracy) = 93%

Recall (sensitivity / coverage) = 86%

Patient level testing done on all patients with primary diagnosis code F32* or F33* (testing done on 30 random documents):

Precision (specificity / accuracy) = 73%

Additional Notes

Run schedule – Monthly

Other Specifications

Version 1.0, Last updated: xx

Arousal

Brief Description

Application to identify instances of arousal excluding sexual arousal.

Development Approach

Development approach: Machine-learning.

Classification of past or present symptom: Both.

Classes produced: Positive

Output and Definitions

The output includes:

Examples of positive mentions:

“...the decisions she makes when emotionally aroused”,

“...during hyperaroused state”,

“following an incidence of physiological arousal”

Examples of negative / irrelevant mentions (not included in the output):

“mentions of sexual arousal”,

“not aroused”,

“annotations include unclear statements and hypotheticals”

Search term(s): *arous*

Evaluated Performance

Cohen's k = 95% (testing done on 50 random documents).

Instance level (testing done on 100 random documents):

Precision (specificity / accuracy) = 89%

Recall (sensitivity / coverage) = 91%

Additional Notes

Run schedule – Monthly

Other Specifications

Version 1.0, Last updated: xx

DOI

Bad Dreams

Brief Description

Application to identify instances of experiencing a bad dream

Development Approach

Development approach: Machine-learning.

Classification of past or present symptom: Both.

Classes produced: Positive

Output and Definitions

The output includes:

Examples of positive mentions:

“ZZZZZ had a bad dream last night”,

“she frequently has bad dreams”,

“ZZZZZ has suffered from bad dreams in the past”,

“ZZZZZ had a bad dream that she was underwater”,

“he’s been having fewer bad dreams”

Examples of negative / irrelevant mentions (not included in the output):

“she denied any bad dreams”,

“does not suffer from bad dreams”,

“she said it might have been a bad dream”,

“he woke up in a start, as if waking from a bad dream”,

Search term(s): bad dream*

Evaluated Performance

Cohen's k = 100% (testing done on 100 random documents).

Instance level (testing done on 100 random documents):

Precision (specificity / accuracy) = 89%

Recall (sensitivity / coverage) = 100%

Additional Notes

Run schedule – Monthly

Other Specifications

Version 1.0, Last updated: xx

Blunted Affect

Brief Description

Application to identify instances of blunted affect

Development Approach

Development approach: Machine-learning.

Classification of past or present symptom: Both.

Classes produced: Positive

Output and Definitions

The output includes:

Examples of positive mentions:

“his affect remains very blunted”,

“objectively flattened affect”,

“states that ZZZZZ continues to appear flat in affect”

Examples of negative / irrelevant mentions (not included in the output):

“incongruent affect”,

“stable affect”,

“typical symptoms include blunted affect”,

“slightly flat affect”,

Search term(s): *affect*

Evaluated Performance

Cohen's k = 100% (testing done on 50 random documents).

Instance level (testing done on 100 random documents):

Precision (specificity / accuracy) = 100%

Recall (sensitivity / coverage) = 80%

Additional Notes

Run schedule – Monthly

Other Specifications

Version 1.0, Last updated: xx

Circumstantiality

Brief Description

Application to identify instances of circumstantiality

Development Approach

Development approach: Machine-learning.

Classification of past or present symptom: Both.

Classes produced: Positive

Output and Definitions

The output includes:

Examples of positive mentions:

“loose associations and circumstantiality”,

“circumstantial in nature”,

“some circumstantiality at points”,

“speech is less circumstantial”

Examples of negative / irrelevant mentions (not included in the output):

“no signs of circumstantiality”,

“no evidence of circumstantial”

“Such as a hypothetical cause of something else”

Search term(s): *circumstan*

Evaluated Performance

Cohen's k = 100% (testing done on 50 random documents).

Instance level (testing done on 100 random documents):

Precision (specificity / accuracy) = 94%

Recall (sensitivity / coverage) = 92%

Additional Notes

Run schedule – Monthly

Other Specifications

Version 1.0, Last updated: xx

Cognitive Impairment

Brief Description

Application to identify instances of cognitive impairment. The application detects cognitive impairments related to attention, memory, executive functions and emotion, as well as a generic cognition domain. It was developed for patients diagnosed with schizophrenia.

Development Approach

Development approach: Rule-Based.

Classification of past or present symptom: Both.

Classes produced: Affirmed (and relating to the patient) and Negated/Irrelevant.

Output and Definitions

The output includes:

Examples of positive mentions:

“patient shows attention problems”

“ZZZ does not show good concentration”

“ZZZ shows poor concentration”

“patient scored 10/18 for attention”

“ZZZ seems hyper-vigilant”

Examples of negative / irrelevant mentions (not included in the output):

“patient uses distraction technique to ignore hallucinations”

“attention seeking”

“patient needs (medical) attention”

“draw your attention to…”

Search term(s): attention, concentration, focus, distracted, adhd, hypervigilance, attend to

Evaluated Performance

Cohen’s k:

Cognition – 66% (testing done on 3000 random documents)

Emotion – 84% (testing done on 3000 random documents)

Executive function – 40% (testing done on 3000 random documents)

Memory – 68% (testing done on 3000 random documents)

Attention – 99% (testing done on 2616 random documents).

Instance level (testing done on 100 random documents):

Precision (specificity / accuracy) = 96%

Recall (sensitivity / coverage) = 92%

Patient level testing done on all patients with an F20 diagnosis (testing done on 100 random documents):

Precision (specificity / accuracy) = 78%

Recall (sensitivity / coverage) = 70%

Additional Notes

Run schedule – On request

Other Specifications

Version 1.0, Last updated: xx

DOI

Concrete Thinking

Brief Description

Application to identify instances of concrete thinking.

Development Approach

Development approach: Machine-learning.

Classification of past or present symptom: Both.

Classes produced: Positive

Output and Definitions

The output includes:

Examples of positive mentions:

“text referring to ‘concrete thinking’”,

“speech or answers to questions being ‘concrete’”,

“the patient being described as ‘concrete’ without elaboration”,

“answers being described as concrete in cognitive assessments”,

“‘understanding’ or ‘manner’ or ‘interpretations’ of circumstances being described as concrete”

Examples of negative / irrelevant mentions (not included in the output):

“no evidence of concrete thinking”

“reference to concrete as a material (concrete floor, concrete house etc.)”

“no concrete plans”,

“delusions being concrete”

Search term(s): Concret*, "concret* think*"

Evaluated Performance

Cohen's k = 83% (testing done on 50 random documents)

Instance level (testing done on 146 random documents):

Precision (specificity / accuracy) = 84%

Recall (sensitivity / coverage) = 41%

Additional Notes

Run schedule – Monthly

Other Specifications

Version 1.0, Last updated: xx

Delusions

Brief Description

Application to identify instances of delusions

Development Approach

Development approach: Machine-learning.

Classification of past or present symptom: Both.

Classes produced: Positive

Output and Definitions

The output includes:

Examples of positive mentions:

“paranoid delusions”,

“continued to express delusional ideas of the nature”

“no longer delusional” (indicates a past delusion)

Examples of negative / irrelevant mentions (not included in the output):

“no delusions”,

“denied delusions”

“delusions are common”

Search term(s): *delusion*

Evaluated Performance

Cohen's k = 92% (testing done on 50 random documents).

Instance level (testing done on 100 random documents):

Precision (specificity / accuracy) = 93%

Recall (sensitivity / coverage) = 85%

Additional Notes

Run schedule – Monthly

Other Specifications

Version 1.0, Last updated: xx

DOI

Derailment

Brief Description

Application to identify instances of derailment.

Development Approach

Development approach: Machine-learning.

Classification of past or present symptom: Both.

Classes produced: Positive

Output and Definitions

The output includes:

Examples of positive mentions:

“he derailed frequently”,

“there was evidence of flight of ideas”,

“thought derailment in his language”,

“speech no longer derailed”.

Examples of negative / irrelevant mentions (not included in the output):

“no derailment”,

“erratic compliance can further derail her stability”

“train was derailed”

Search term(s): *derail*

Evaluated Performance

Cohen's k = 100% (testing done on 50 random documents).

Instance level (testing done on 100 random documents):

Precision (specificity / accuracy) = 88%

Recall (sensitivity / coverage) = 95%

Additional Notes

Run schedule – Monthly

Other Specifications

Version 1.0, Last updated: xx

Disturbed Sleep

Brief Description

Application to identify instances of disturbed sleep.

Development Approach

Development approach: Rule-Based.

Classes produced: Positive

Output and Definitions

The output includes:

Examples of positive mentions:

“complains of poor sleep”,

“sleep disturbed”,

“sleep difficulty”,

“sleeping poorly”

Search term(s): "disturbed sleep*", "difficult* sleep*", "poor sleep*"

Evaluated Performance

Cohen's k = 75% (testing done on 50 random documents).

Instance level (testing done on 100 random documents):

Precision (specificity / accuracy) = 88%

Recall (sensitivity / coverage) = 68%

Additional Notes

Run schedule – Monthly

Other Specifications

Version 2.0, Last updated: xx

Diurnal Variation

Brief Description

Application to identify instances of diurnal variation of mood

Development Approach

Development approach: Machine-learning.

Classification of past or present symptom: Both.

Classes produced: Positive

Output and Definitions

The output includes:

Examples of positive mentions:

"patient complaints of diurnal variation"

"he reported diurnal variation in his mood"

"Diurnal variation present"

"some diurnal variation of mood present"

Examples of negative / irrelevant mentions (not included in the output):

"no diurnal variation"

"diurnal variation absent"

"diurnal variation could be a symptom of more severe depression"

"we spoke about possible diurnal variation in his mood"

Search term(s): diurnal variation

Evaluated Performance

Cohen's k = xx

Instance level (testing done on 100 random documents):

Precision (specificity / accuracy) = 94%

Recall (sensitivity / coverage) = 100%

Additional Notes

Run schedule – Monthly

Other Specifications

Version 1.0, Last updated: xx

Drowsiness

Brief Description

Application to identify instances of drowsiness

Development Approach

Development approach: Machine-learning.

Classification of past or present symptom: Both.

Classes produced: Positive

Output and Definitions

The output includes:

Examples of positive mentions:

“ZZZZZ appeared to be drowsy”,

“She has complained of feeling drowsy”

Examples of negative / irrelevant mentions (not included in the output):

“He is not drowsy in the mornings”,

“She was quite happy and did not appear drowsy”,

“In reading the label (of the medication), ZZZZZ expressed concern in the indication that it might make him drowsy”,

“Monitor for increased drowsiness and inform for change in presentation”,

Search term(s): drows*

Evaluated Performance

Cohen's k = 83% (testing done on 1000 random documents).

Instance level (testing done on 100 random documents):

Precision (specificity / accuracy) = 80%

Recall (sensitivity / coverage) = 100%

Additional Notes

Run schedule – Monthly

Other Specifications

Version 1.0, Last updated: xx

Early Morning Wakening

Brief Description

Application to identify instances of early morning wakening.

Development Approach

Development approach: Machine-learning.

Classification of past or present symptom: Both.

Classes produced: Positive

Output and Definitions

The output includes:

Examples of positive mentions:

“patient complaints of early morning awakening”,

“he reported early morning wakening”,

“Early morning awakening present”,

“there is still some early morning wakening”

Examples of negative / irrelevant mentions (not included in the output):

“no early morning wakening”,

“early morning wakening absent”,

“early morning awakening could be a symptom of more severe depression”,

“we spoke about how to deal possible early morning wakening”

Search term(s): early morning wakening

Evaluated Performance

Cohen's k = xx

Instance level (testing done on 100 random documents):

Precision (specificity / accuracy) = 95%

Recall (sensitivity / coverage) = 96%

Additional Notes

Run schedule – Monthly

Other Specifications

Version 1.0, Last updated: xx

Echolalia

Brief Description

Application to extract occurrences where echolalia is present.

Development Approach

Development approach: Machine-learning.

Classification of past or present symptom: Both.

Classes produced: Positive

Output and Definitions

The output includes:

Examples of positive mentions:

“no neologisms, but repeated what I said almost like echolalia”,

“intermittent echolalia”,

“some or less echolalia”

Examples of negative / irrelevant mentions (not included in the output):

“no echolalia”,

“no evidence of echolalia”,

“Echolalia is not a common symptom”,

“Include hypotheticals such as he may have some echolalia, evidence of possible echolalia”

Search term(s): *echola*

Evaluated Performance

Cohen's k = 88% (testing done on 50 random documents).

Instance level (testing done on 100 random documents):

Precision (specificity / accuracy) = 89%

Recall (sensitivity / coverage) = 86%

Additional Notes

Run schedule – Monthly

Other Specifications

Version 1.0, Last updated: xx

DOI

Elation

Brief Description

Application to identify instances of elation.

Development Approach

Development approach: Machine-learning.

Classification of past or present symptom: Both.

Classes produced: Positive

Output and Definitions

The output includes:

Examples of positive mentions:

“mildly elated in mood”,

“elated in mood on return from leave”,

“she appeared elated and aroused”

Examples of negative / irrelevant mentions (not included in the output):

“ZZZZZ was coherent and more optimistic/aspirational than elated throughout the conversation”,

“no elated behaviour” etc.,

“In his elated state there is a risk of accidental harm”,

“monitor for elation”,

Search term(s): *elat*

Evaluated Performance

Cohen's k = 100% (testing done on 50 random documents).

Instance level (testing done on 100 random documents):

Precision (specificity / accuracy) = 94%

Recall (sensitivity / coverage) = 97%

Additional Notes

Run schedule – Monthly

Other Specifications

Version 1.0, Last updated: xx

Emotional Withdrawal

Brief Description

Application to identify instances of emotional withdrawal

Development Approach

Development approach: Machine-learning.

Classification of past or present symptom: Both.

Classes produced: Positive

Output and Definitions

The output includes:

Examples of positive mentions:

Any description of the patient as withdrawn or showing withdrawal, with the following exceptions (which are annotated as unknown):

• Alcohol, substance, medication withdrawal

• Withdrawal symptoms, fits, seizures etc.

• Social withdrawal (i.e. a patient described as becoming withdrawn would be positive but a patient described as showing ‘social withdrawal’ would be unknown – because social withdrawal is covered in another application).

• Thought withdrawal (e.g. ‘no thought insertion, withdrawal or broadcast’)

• Withdrawing money, benefits being withdrawn etc.

Examples of negative / irrelevant mentions (not included in the output):

Restricted to instances where the patient is described as not withdrawn, together with the exceptions above, which are categorised as unknown.

Search term(s): withdrawn
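The exception list can be expressed as a rule-based pre-filter. The minimal sketch below assumes that the input is split into sentences. The cue patterns and the `triage` function are hypothetical paraphrases of the exceptions above, not the application's own rules. The application itself is machine-learning based.

```python
import re

# Hypothetical cue patterns paraphrasing the exceptions that are annotated as unknown.
EXCEPTION_CUES = [
    re.compile(r"\b(alcohol|substance|drug|medication)\s+withdrawal\b", re.I),
    re.compile(r"\bwithdrawal\s+(symptoms?|fits?|seizures?)\b", re.I),
    re.compile(r"\bsocial\s+withdrawal\b", re.I),
    re.compile(r"\bthought\s+(insertion,?\s+)?withdrawal\b", re.I),
    re.compile(r"\bwithdraw(ing|n)?\s+(money|cash|benefits?)\b", re.I),
    re.compile(r"\b(money|benefits?)\b.*\bwithdrawn\b", re.I),
]
MENTION = re.compile(r"\bwithdraw", re.I)


def triage(sentence: str) -> str:
    """Return 'no_mention', 'unknown' (an excepted sense) or 'candidate'."""
    if not MENTION.search(sentence):
        return "no_mention"
    if any(cue.search(sentence) for cue in EXCEPTION_CUES):
        return "unknown"
    # Remaining mentions would go on to the classifier for a positive/negative decision.
    return "candidate"
```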

Evaluated Performance

Cohen's k = 100% (testing done on 50 random documents).

Instance level (testing done on 100 random documents):

Precision (specificity / accuracy) = 85%

Recall (sensitivity / coverage) = 96%

Additional Notes

Run schedule – Monthly

Other Specifications

Version 1.0, Last updated:xx

DOI

Eye Contact (Categorisation)

Brief Description

Application to identify instances of eye contact and determine the type of eye contact.

Development Approach

Development approach: Rule-Based.

Classification of past or present symptom: Both.

Classes produced: Positive

Output and Definitions

The output includes-

Examples of positive mentions:

The application successfully identifies the type of eye contact (as denoted by the keyword) in the context (as denoted by the contextstring).

e.g., keyword: ‘good’; contextstring: ‘There was good eye contact’

Negative mentions: the application does not successfully identify the type of eye contact (as denoted by the keyword) in the context (as denoted by the contextstring). The keyword does not relate to the eye contact.

e.g., keyword: ‘showed’; contextstring: ‘showed little eye-contact’.

Keyword: the term describing the type of eye contact

ContextString: the context containing the keyword in its relation to eye-contact

Search term(s): Eye *contact*
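Each output row pairs a keyword with its contextstring. The minimal rule-based sketch below shows how such a pair could be extracted. It assumes a small, hypothetical vocabulary of descriptors and two surface patterns, as in 'good eye contact' and 'eye contact was limited'. The real application's lexicon and rules are not listed here.

```python
import re
from typing import NamedTuple, Optional


class EyeContactMention(NamedTuple):
    keyword: str        # term describing the type of eye contact
    contextstring: str  # text the keyword was found in


# Hypothetical descriptor vocabulary, for illustration only.
DESCRIPTORS = r"(good|poor|fleeting|limited|little|reduced|increased|intermittent|appropriate|no)"
# Descriptor directly before 'eye contact' or 'eye-contact'.
BEFORE = re.compile(r"\b" + DESCRIPTORS + r"\s+eye[\s-]contact", re.I)
# 'eye contact was/is (very) <descriptor>'.
AFTER = re.compile(r"\beye[\s-]contact\s+(?:was|is)\s+(?:very\s+)?" + DESCRIPTORS + r"\b", re.I)


def categorise(sentence: str) -> Optional[EyeContactMention]:
    """Return the first (keyword, contextstring) pair found, or None."""
    for pattern in (BEFORE, AFTER):
        match = pattern.search(sentence)
        if match:
            return EyeContactMention(match.group(1).lower(), sentence)
    return None
```

For 'showed little eye-contact', this sketch returns the keyword 'little'. In the catalogue's negative example, the output keyword for the same text was 'showed'.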

Evaluated Performance

Cohen's k = xx

Instance level (testing done on 100 random documents):

Precision (specificity / accuracy) = 91%

Recall (sensitivity / coverage) = 80%

Additional Notes

Run schedule – On request

Other Specifications

Version 1.0, Last updated:xx

Fatigue

Brief Description

Application to identify symptoms of fatigue.

Development Approach

Development approach: Machine-learning.

Classification of past or present symptom: Both.

Classes produced: Positive

Output and Definitions

The output includes-

Examples of positive mentions:

“ZZZZ has been experiencing fatigue”,

“fatigue interfering with daily activities”

Examples of negative / irrelevant mentions (not included in the output):

“No mentions of fatigue”,

“her high levels of anxiety impact on fatigue”,

“main symptoms of dissociation leading to fatigue”

“ZZZZ is undertaking CBT for fatigue”.

Search term(s): Fatigue, exclude ‘chronic fatigue syndrome’.
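The Fatigue search excludes ‘chronic fatigue syndrome’. Below is a minimal sketch of a search with a phrase exclusion. It assumes that a match falling inside the excluded phrase is dropped, while other fatigue mentions in the same text are kept. `fatigue_mentions` is a hypothetical name.

```python
import re

FATIGUE = re.compile(r"\bfatigue\w*", re.IGNORECASE)
EXCLUDED_PHRASE = re.compile(r"\bchronic\s+fatigue\s+syndrome\b", re.IGNORECASE)


def fatigue_mentions(text: str) -> list[str]:
    """Return fatigue mentions that are not part of 'chronic fatigue syndrome'."""
    excluded_spans = [m.span() for m in EXCLUDED_PHRASE.finditer(text)]
    mentions = []
    for match in FATIGUE.finditer(text):
        inside_excluded = any(start <= match.start() < end for start, end in excluded_spans)
        if not inside_excluded:
            mentions.append(match.group(0))
    return mentions


print(fatigue_mentions("History of chronic fatigue syndrome; now reports fatigue daily"))
```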

Evaluated Performance

Cohen's k = xx

Instance level (testing done on 100 random documents):

Precision (specificity / accuracy) = 78%

Recall (sensitivity / coverage) = 95%

Additional Notes

Run schedule – Monthly

Other Specifications

Version: xx, Last updated:xx

Flight of Ideas

Brief Description

Application to extract instances of flight of ideas.

Development Approach

Development approach: Machine-learning.

Classification of past or present symptom: Both.

Classes produced: Positive

Output and Definitions

The output includes-

Examples of positive mentions:

“Mrs ZZZZZ was very elated with by marked flights of ideas”,

“marked pressure of speech associated with flights of ideas”,

“Some flight of ideas”.

Examples of negative / irrelevant mentions (not included in the output):

“no evidence of flight of ideas”,

“no flight of ideas”

“bordering on flight of ideas”

“relative shows FOI”

Search term(s): *flight* *of* *idea*
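The negative and irrelevant examples depend on context: a negation cue ('no evidence of') or a hedge ('bordering on', '?') appears before the target term. Flight of Ideas uses machine learning. The sketch below is a simpler rule-based baseline in the spirit of NegEx, a well-known rule-based negation algorithm. It assumes a fixed window of preceding tokens and small, hypothetical cue lists, and serves only to make that distinction concrete.

```python
import re

# Hypothetical cue lists; a production system would use far larger, curated lists.
NEGATION_CUES = {"no", "not", "denies", "denied", "without"}
HEDGE_CUES = {"bordering", "possible", "possibly", "might", "may", "?"}


def classify(sentence: str, target: str, window: int = 5) -> str:
    """Label the first occurrence of `target` as affirmed, negated, uncertain or absent."""
    lowered = sentence.lower()
    position = lowered.find(target.lower())
    if position < 0:
        return "absent"
    # Tokens (words and question marks) in the window immediately before the target.
    preceding = re.findall(r"\w+|\?", lowered[:position])[-window:]
    if any(token in NEGATION_CUES for token in preceding):
        return "negated"
    if any(token in HEDGE_CUES for token in preceding):
        return "uncertain"
    return "affirmed"
```

A fixed window is brittle. For example, in 'hallucinations present?' the question mark comes after the term, so this sketch would return "affirmed". A learned classifier can take such context into account.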

Evaluated Performance

Cohen's k = 96% (testing done on 50 random documents).

Instance level (testing done on 100 random documents):

Precision (specificity / accuracy) = 91%

Recall (sensitivity / coverage) = 94%

Additional Notes

Run schedule – Monthly

Other Specifications

Version 1.0, Last updated:xx

DOI

Fluctuation

Brief Description

The purpose of this application is to determine whether a mention of fluctuation within the text is relevant.

Development Approach

Development approach: Machine-learning.

Classification of past or present symptom: Both.

Classes produced: Positive

Output and Definitions

The output includes-

Examples of positive mentions:

“Mrs ZZZZZ’s mood has been fluctuating a lot”,

“suicidal thoughts appear to fluctuate”

Examples of negative / irrelevant mentions (not included in the output):

“no evidence of mood fluctuation”,

“does not appear to have significant fluctuations in mental state”

“unsure whether fluctuation has a mood component”,

“monitoring to see if fluctuations deteriorate”

Evaluated Performance

Cohen's k = xx

Instance level (testing done on 100 random documents):

Precision (specificity / accuracy) = 87%

Recall (sensitivity / coverage) = 96%

Additional Notes

Run schedule – On request

Other Specifications

Version 1.0, Last updated:xx

DOI

Formal Thought Disorder

Brief Description

Application to extract occurrences where formal thought disorder is present.

Development Approach

Development approach: Machine-learning.

Classification of past or present symptom: Both.

Classes produced: Positive

Output and Definitions

The output includes-

Examples of positive mentions:

“deteriorating into a more thought disordered state with outbursts of aggression”,

“there was always a degree thought disorder”,

“Include some formal thought disorder”

Examples of negative / irrelevant mentions (not included in the output):

“no signs of FTD”,

“NFTD”

“?FTD”,

“relative shows FTD”,

Search term(s): *ftd*, *formal* *thought* *disorder*

Evaluated Performance

Cohen's k = 100% (testing done on 50 random documents).

Instance level (testing done on 100 random documents):

Precision (specificity / accuracy) = 83%

Recall (sensitivity / coverage) = 83%

Additional Notes

Run schedule – Monthly

Other Specifications

Version 1.0, Last updated:xx

DOI

Grandiosity

Brief Description

Application to extract occurrences where grandiosity is apparent.

Development Approach

Development approach: Machine-learning.

Classification of past or present symptom: Both.

Classes produced: Positive

Output and Definitions

The output includes-

Examples of positive mentions:

“ZZZZZ was wearing slippers and was animated elated and grandiose”,

“reduction in grandiosity”,

“No longer grandiose”

Examples of negative / irrelevant mentions (not included in the output):

“No evidence of grandiose of delusions in the content of his speech”,

“no evidence of grandiose ideas”

“his experience could lead to grandiose ideas”

Search term(s): *grandios*

Evaluated Performance

Cohen's k = 89% (testing done on 50 random documents).

Instance level (testing done on 100 random documents):

Precision (specificity / accuracy) = 95%

Recall (sensitivity / coverage) = 91%

Additional Notes

Run schedule – Monthly

Other Specifications

Version 1.0, Last updated:xx

Guilt

Brief Description

Application to identify instances of guilt.

Development Approach

Development approach: Machine-learning.

Classification of past or present symptom: Both.

Classes produced: Positive

Output and Definitions

The output includes-

Examples of positive mentions:

“she then feels guilty/angry towards mum”,

“Being angry is easier to deal with than feeling guilty”,

“Include feelings of guilt with a reasonable cause and mentions stating”,

“no longer feels guilty”

Examples of negative / irrelevant mentions (not included in the output):

“No feeling of guilt”,

“denies feeling hopeless or guilty”

“he might be feeling guilty”,

“some guilt”,

“sometimes feeling guilty”

Search term(s): *guil*

Evaluated Performance

Cohen's k = 92% (testing done on 50 random documents).

Instance level (testing done on 100 random documents):

Precision (specificity / accuracy) = 83%

Recall (sensitivity / coverage) = 83%

Patient level testing done on all patients with primary diagnosis code of F32* or F33* (testing done on 90 random documents):

Precision (specificity / accuracy) = 93%
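Patient-level figures are restricted to patients whose primary diagnosis is coded F32* or F33* (ICD-10 depressive episode and recurrent depressive disorder). The minimal sketch below shows the cohort filter and a patient-level precision. It assumes that a patient counts as flagged if the application output any positive mention for them, and as confirmed if an annotator agreed with at least one of those mentions. The catalogue does not spell out its exact patient-level procedure, and both function names are hypothetical.

```python
from typing import Iterable, Tuple


def in_depression_cohort(primary_diagnosis: str) -> bool:
    """True for ICD-10 codes matching F32* or F33*."""
    return primary_diagnosis.strip().upper().startswith(("F32", "F33"))


def patient_level_precision(mentions: Iterable[Tuple[str, bool, bool]]) -> float:
    """mentions: (patient_id, app_positive, annotator_confirmed) for each output mention."""
    flagged, confirmed = set(), set()
    for patient_id, app_positive, annotator_confirmed in mentions:
        if app_positive:
            flagged.add(patient_id)
            if annotator_confirmed:
                confirmed.add(patient_id)
    # Share of flagged patients with at least one confirmed mention.
    return len(confirmed) / len(flagged) if flagged else 0.0
```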

Additional Notes

Run schedule – Monthly

Other Specifications

Version 1.0, Last updated:xx

Hallucinations (All)

Brief Description

Application to identify instances of hallucinations.

Development Approach

Development approach: Machine-learning.

Classification of past or present symptom: Both.

Classes produced: Positive

Output and Definitions

The output includes-

Examples of positive mentions:

“her husband was minimising her hallucinations”,

“continues to experience auditory hallucinations”,

“doesn’t appear distressed by his hallucinations”,

“he reported auditory and visual hallucinations”,

“this will likely worsen her hallucinations”,

“his hallucinations subsided”,

“Neuroleptics were prescribed for her hallucinations”.

Examples of negative / irrelevant mentions (not included in the output):

“denied any hallucinations”,

“no evidence of auditory hallucinations”,

“pseudo(-) hallucinations”,

“hallucinations present?”,

Search term(s): hallucinat*

Evaluated Performance

Cohen's k = 83% (testing done on 100 random documents).

Instance level (testing done on 100 random documents):

Precision (specificity / accuracy) = 84%

Recall (sensitivity / coverage) = 98%

Additional Notes

Run schedule – Monthly

Other Specifications

Version 2.0, Last updated:xx

DOI

Hallucinations - Auditory

Brief Description

Application to identify instances of auditory hallucinations non-specific to diagnosis.

Development Approach

Development approach: Machine-learning.

Classification of past or present symptom: Both.

Classes produced: Positive

Output and Definitions

The output includes-

Examples of positive mentions:

“Seems to be having olfactory hallucination”,

“in relation to her tactile hallucinations”

Examples of negative / irrelevant mentions (not included in the output):

“denies auditory, visual, gustatory, olfactory and tactile hallucinations at the time of the assessment”,

“denied tactile/olfactory hallucination”

“possibly olfactory hallucinations”

Search term(s): auditory hallucinat*

Evaluated Performance

Cohen's k = 96% (testing done on 50 random documents).

Instance level (testing done on 100 random documents):

Precision (specificity / accuracy) = 80%

Recall (sensitivity / coverage) = 84%

Additional Notes

Run schedule – Monthly

Other Specifications

Version 1.0, Last updated:xx

Hallucinations - Olfactory Tactile Gustatory (OTG)

Brief Description

Application to extract occurrences where olfactory, tactile or gustatory hallucinations are present. These hallucinations may be due to a diagnosis of psychosis/schizophrenia or may be due to other causes, e.g. substance abuse.

Development Approach

Development approach: Machine-learning.

Classification of past or present symptom: Both.

Classes produced: Positive

Output and Definitions

The output includes-

Examples of positive mentions:

“seems to be having olfactory hallucinations”,

“in relation to her tactile hallucinations”

Examples of negative / irrelevant mentions (not included in the output):

“denies auditory, visual, gustatory, olfactory and tactile hallucinations at the time of the assessment”,

“denied tactile/olfactory hallucinations”

“possibly olfactory hallucinations”

Search term(s): *olfactory*, *hallucin*, *gustat*, *tactile*

Evaluated Performance

Cohen's k = 100% (testing done on 50 random documents).

Instance level (testing done on 100 random documents):

Precision (specificity / accuracy) = 78%

Recall (sensitivity / coverage) = 68%

Additional Notes

Run schedule – Monthly

Other Specifications

Version 1.0, Last updated:xx

Hallucinations - Visual

Brief Description

Application to extract occurrences where visual hallucination is present. Visual hallucinations may be due to a diagnosis of psychosis/schizophrenia or may be due to other causes, e.g. due to substance abuse.

Development Approach

Development approach: Machine-learning.

Classification of past or present symptom: Both.

Classes produced: Positive

Output and Definitions

The output includes-

Examples of negative / irrelevant mentions (not included in the output):

“denied any visual hallucination”,

“not responding to visual hallucination”,

“no visual hallucination”,

“if/may/possible/possibly/might have visual hallucinations”,

“monitor for possible visual hallucination”

Search term(s): visual hallucinat*

Evaluated Performance

Cohen's k = 100% (testing done on 50 random documents).

Instance level (testing done on 100 random documents):

Precision (specificity / accuracy) = 91%

Recall (sensitivity / coverage) = 96%

Additional Notes

Run schedule – Monthly

Other Specifications

Version 1.0, Last updated:xx

Helplessness

Brief Description

Application to identify instances of helplessness.

Development Approach

Development approach: Machine-learning.

Classification of past or present symptom: Both.

Classes produced: Positive

Output and Definitions

The output includes-

Examples of positive mentions:

“Ideas of helplessness secondary to her physical symptoms present”,

“ideation compounded by anxiety and a sense of helplessness”

Examples of negative / irrelevant mentions (not included in the output):

“denies uselessness or helplessness”,

“no thoughts of hopelessness or helplessness”.

“there a sense of helplessness”,

“helplessness is a common symptom”

Search term(s): *helpless*

Evaluated Performance

Cohen's k = 100% (testing done on 50 random documents).

Instance level (testing done on 100 random documents):

Precision (specificity / accuracy) = 93%

Recall (sensitivity / coverage) = 86%

Patient level testing done on all patients with primary diagnosis of F32* or F33* (testing done on 30 random documents):

Precision (specificity / accuracy) = 90%

Additional Notes

Run schedule – Monthly

Other Specifications

Version 1.0, Last updated:xx

DOI

Hopelessness

Brief Description

Application to identify instances of hopelessness

Development Approach

Development approach: Machine-learning.

Classification of past or present symptom: Both.

Classes produced: Positive

Output and Definitions

The output includes-

Examples of positive mentions:

“feeling very low and hopeless”,

“says feels hopeless”

Examples of negative / irrelevant mentions (not included in the output):

“denies hopelessness”,

“no thoughts of hopelessness or helplessness”

“there a sense of hopelessness”,

“hopelessness is a common symptom”

Search term(s): *hopeles*

Evaluated Performance

Cohen's k = 90% (testing done on 50 random documents).

Instance level (testing done on 100 random documents):

Precision (specificity / accuracy) = 90%

Recall (sensitivity / coverage) = 95%

Patient level testing done on all patients with a primary diagnosis of F32* or F33*:

Precision (specificity / accuracy) = 87%

Additional Notes

Run schedule – Monthly

Other Specifications

Version 1.0, Last updated:xx

DOI

Hostility

Brief Description

Application to identify instances of hostility.

Development Approach

Development approach: Machine-learning.

Classification of past or present symptom: Both.

Classes produced: Positive

Output and Definitions

The output includes-

Examples of positive mentions:

“increased hostility and paranoia”,

“she presented as hostile to the nurses”

Examples of negative / irrelevant mentions (not included in the output):

“not hostile”,

“denied any feelings of hostility”

“he may become hostile”,

“hostility is something to look out for”

Search term(s): *hostil*

Evaluated Performance

Cohen's k = 94% (testing done on 50 random documents).

Instance level (testing done on 100 random documents):

Precision (specificity / accuracy) = 89%

Recall (sensitivity / coverage) = 94%

Additional Notes

Run schedule – Monthly

Other Specifications

Version 1.0, Last updated:xx

Insomnia

Brief Description

Application to identify instances of insomnia.

Development Approach

Development approach: Machine-learning.

Classification of past or present symptom: Both.

Classes produced: Positive

Output and Definitions

The output includes-

Examples of positive mentions:

“initial insomnia”,

“contributes to her insomnia”,

“problems with insomnia”,

“this has resulted in insomnia”,

“this will address his insomnia”

Examples of negative / irrelevant mentions (not included in the output):

“no insomnia”,

“not insomniac”

“Typical symptoms include insomnia”,

“might have insomnia”,

Search term(s): *insom*

Evaluated Performance

Cohen's k = 94% (testing done on 50 random documents).

Instance level (testing done on 100 random documents):

Precision (specificity / accuracy) = 89%

Recall (sensitivity / coverage) = 94%

Patient level testing done on all patients with primary diagnosis of F32* or F33* (testing done on 50 random documents):

Precision (specificity / accuracy) = 94%

Additional Notes

Run schedule – Monthly

Other Specifications

Version 1.0, Last updated:xx

DOI

Irritability

Brief Description

Application to identify instances of irritability.

Development Approach

Development approach: Machine-learning.

Classification of past or present symptom: Both.

Classes produced: Positive

Output and Definitions

The output includes-

Examples of positive mentions:

“can be irritable”,

“became irritable”,

“appeared irritable”,

“complained of feeling irritable”

Examples of negative / irrelevant mentions (not included in the output):

“no evidence of irritability”,

“no longer irritable”,

“irritable bowel syndrome”,

“becomes irritable when unwell”,

Search term(s): *irritabl*

Evaluated Performance

Cohen's k = 100% (testing done on 50 random documents).

Instance level (testing done on 100 random documents):

Precision (specificity / accuracy) = 100%

Recall (sensitivity / coverage) = 83%

Additional Notes

Run schedule – Monthly

Other Specifications

Version 1.0, Last updated:xx

Loss of Coherence

Brief Description

Application to identify instances of incoherence or loss of coherence in speech or thinking.

Development Approach

Development approach: Machine-learning.

Classification of past or present symptom: Both.

Classes produced: Positive

Output and Definitions

The output includes-

Examples of positive mentions:

“patient was incoherent”,

“his speech is characterised by a loss of coherence”

Examples of negative / irrelevant mentions (not included in the output):

“patient is coherent”,

“coherence in his thinking”

“coherent discharge plan”,

“could not give me a coherent account”,

Search term(s): coheren*, incoheren*

Evaluated Performance

Cohen's k = 100% (testing done on 50 random documents).

Instance level (testing done on 100 random documents):

Precision (specificity / accuracy) = 98%

Recall (sensitivity / coverage) = 95%

Patient level testing done on all patients with primary diagnosis code F32* or F33* (testing done on 50 random documents):

Precision (specificity / accuracy) = 93%

Additional Notes

Run schedule – Monthly

Other Specifications

Version 1.0, Last updated:xx

Low energy

Brief Description

Application to identify instances of low energy.

Development Approach

Development approach: Machine-learning.

Classification of past or present symptom: Both.

Classes produced: Positive

Output and Definitions

The output includes-

Examples of positive mentions:

“low energy”,

“decreased energy”,

“not much energy”,

“no energy”

Examples of negative / irrelevant mentions (not included in the output):

“no indications of low energy”,

“increased energy”

“..., might be caused by low energy”,

“monitor for low energy”,

Search term(s): *energy*

Evaluated Performance

Cohen's k = 95% (testing done on 50 random documents).

Instance level (testing done on 100 random documents):

Precision (specificity / accuracy) = 82%

Recall (sensitivity / coverage) = 85%

Patient level testing done on all patients with primary diagnosis code F32* or F33* (testing done on 50 random documents):

Precision (specificity / accuracy) = 76%

Additional Notes

Run schedule – Monthly

Other Specifications

Version 1.0, Last updated:xx

Mood instability

Brief Description

This application identifies instances of mood instability.

Development Approach

Development approach: Machine-learning.

Classification of past or present symptom: Both.

Classes produced: Positive

Output and Definitions

The output includes-

Examples of positive mentions:

“she continues to have frequent mood swings”,

“expressed fluctuating mood”

Examples of negative / irrelevant mentions (not included in the output):

“no mood fluctuation”

“no mood unpredictability”,

“denied diurnal mood variations”

“she had harmed others in the past when her mood changed”,

“tried antidepressants in the past but they led to fluctuations in mood”,

Search term(s): "*mood*" and "chang*", "extremes", "fluctuat*", "instability", "*labil*", "*swings*", "*unpredictable*", "unsettled", "unstable", "variable", "*variation*", or "volatile".
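Mood Instability combines a mood term with one of several modifiers. The minimal sketch below shows that co-occurrence search. It assumes that the two terms must appear in the same sentence and uses a naive sentence splitter based on punctuation. Neither of these is documented behaviour.

```python
import re

MOOD = re.compile(r"\w*mood\w*", re.IGNORECASE)
# Modifiers from the search-term list, with '*' read as 'any word characters'.
MODIFIERS = re.compile(
    r"\b(chang\w*|extremes|fluctuat\w*|instability|\w*labil\w*|\w*swings\w*|"
    r"\w*unpredictable\w*|unsettled|unstable|variable|\w*variation\w*|volatile)\b",
    re.IGNORECASE,
)


def split_sentences(text: str) -> list[str]:
    """Naive splitter: break after '.', '!' or '?' followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def mood_instability_candidates(text: str) -> list[str]:
    """Sentences containing both a mood term and an instability modifier."""
    return [s for s in split_sentences(text) if MOOD.search(s) and MODIFIERS.search(s)]
```

Negated mentions such as “no mood fluctuation” still pass this filter. Separating them from genuine instability is the job of the classification step.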

Evaluated Performance

Cohen's k = 91% (testing done on 50 random documents).

Instance level (testing done on 100 random documents):

Precision (specificity / accuracy) = 100%

Recall (sensitivity / coverage) = 70%

Additional Notes

Run schedule – Monthly

Other Specifications

Version 1.0, Last updated:xx

Mutism

Brief Description

Application to identify instances of mutism.

Development Approach

Development approach: Machine-learning.

Classification of past or present symptom: Both.

Classes produced: Positive

Output and Definitions

The output includes-

Examples of positive mentions:

“she has periods of 'mutism'”,

“he did not respond any further and remained mute”

Examples of negative / irrelevant mentions (not included in the output):

“her mother is mute”,

“muted body language”

Search term(s): *mute*, *mutism*

Evaluated Performance

Cohen's k = 100% (testing done on 50 random documents).

Instance level (testing done on 100 random documents):

Precision (specificity / accuracy) = 91%

Recall (sensitivity / coverage) = 75%

Additional Notes

Run schedule – Monthly

Other Specifications

Version 1.0, Last updated:xx

Negative Symptoms

Brief Description

Application to identify instances of negative symptoms.

Development Approach

Development approach: Machine-learning.

Classification of past or present symptom: Both.

Classes produced: Positive

Output and Definitions

The output includes-

Examples of positive mentions:

“she was having negative symptoms”,

“diagnosis of schizophrenia with prominent negative symptoms”

Examples of negative / irrelevant mentions (not included in the output):

“no negative symptom”,

“no evidence of negative symptoms”

“symptoms present?”,

“negative symptoms can be debilitating”

Search term(s): *negative* *symptom*

Evaluated Performance

Cohen's k = 85% (testing done on 50 random documents).

Instance level (testing done on 100 random documents):

Precision (specificity / accuracy) = 86%

Recall (sensitivity / coverage) = 95%

Additional Notes

Run schedule – Monthly

Other Specifications

Version 1.0, Last updated:xx

Nightmares

Brief Description

Application to identify instances of nightmares.

Development Approach

Development approach: Machine-learning.

Classification of past or present symptom: Both.

Classes produced: Positive

Output and Definitions

The output includes-

Examples of positive mentions:

“she was having nightmares”,

“unsettled sleep with vivid nightmares”

Examples of negative / irrelevant mentions (not included in the output):

“no nightmares”,

“no complains of having nightmares”

“it’s been a nightmare to get this arranged”,

“a nightmare scenario would be….”

Search term(s): nightmare*

Evaluated Performance

Cohen's k = 95% (testing done on 50 random documents).

Instance level (testing done on 100 random documents):

Precision (specificity / accuracy) = 89%

Recall (sensitivity / coverage) = 100%

Additional Notes

Run schedule – Monthly

Other Specifications

Version 1.0, Last updated:xx

Obsessive Compulsive Symptoms

Brief Description

Application to identify obsessive-compulsive symptoms (OCS) in patients with schizophrenia, schizoaffective disorder or bipolar disorder.

Development Approach

Development approach: Rule-Based.

Classification of past or present symptom: Both.

Classes produced: Positive

Output and Definitions

The output includes-

Examples of positive mentions:

• Text states that patient has OCD features/symptoms

• Text states that patient has OCS

• Text including hoarding, which is considered part of OCS, regardless of presence or absence of specific examples

• Text states that patient has either obsessive or compulsive or rituals or Yale-Brown Obsessive Compulsive Scale (YBOCS) [see keywords below] and one of the following:

o Obsessions or compulsions are described as egodystonic

o Obsessions or compulsions are intrusive, or cause patient distress or excessive worrying/anxiety

o Patient feels unable to stop obsessions or compulsions

o Patient recognises symptoms are irrational or senseless

• Clinician provides specific YBOCS symptoms

• Text reports that patient has been diagnosed with OCD by clinician

Negative annotations of OCS include:

• Text makes no mention of OCS

• Text states that patient does not have OCS

• Text states that patient has either compulsions or obsessions, not both, and there is no information about any of the following:

o Patient distress

o Obsessive or compulsive symptoms described as egodystonic

o Inability to stop obsessions or compulsions

o Description of specific compulsions or specific obsessions

o Patient insight

• Text states that non-clinician observers (e.g., patient or family/friends) believe patient has obsessions or compulsions without describing YBOCS symptoms.

• Text includes hedge words (e.g., possibly, apparently, seems) that specifically refer to OCS keywords

• Text includes risky, risk-taking or self-harming behaviours

• Text includes romantic or weight-related (food-related) words that modify OCS keywords
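The OCS application is rule-based. The sketch below encodes a heavily simplified subset of the rules above: explicit OCD/OCS or hoarding mentions count as positive, an OCS keyword needs a qualifying feature, and hedged or negated text is excluded. The lexicons and the negation handling are hypothetical. The sketch also ignores several rules, such as the distinction between clinician and non-clinician observers.

```python
import re

# Hypothetical lexicons paraphrasing the annotation rules; not the application's own lists.
EXPLICIT = re.compile(r"\b(ocd|ocs|hoard\w*)\b", re.I)
OCS_KEYWORDS = re.compile(r"\b(obsessi\w*|compulsi\w*|rituals?|y-?bocs)\b", re.I)
QUALIFIERS = re.compile(
    r"\b(ego-?dystonic|intrusive|distress\w*|unable to stop|irrational|senseless)\b", re.I
)
HEDGES = re.compile(r"\b(possibly|apparently|seems)\b", re.I)
NEGATIONS = re.compile(r"\b(no|not|denies|denied)\b", re.I)


def ocs_positive(sentence: str) -> bool:
    """Very simplified sentence-level version of the positive/negative rules."""
    if HEDGES.search(sentence) or NEGATIONS.search(sentence):
        return False
    if EXPLICIT.search(sentence):
        return True
    # A keyword alone is not enough: it needs a qualifying feature as well.
    return bool(OCS_KEYWORDS.search(sentence) and QUALIFIERS.search(sentence))
```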

Evaluated Performance

Cohen's k = 80% (testing done on 600 random documents).

Instance level (testing done on 100 random documents):

Precision (specificity / accuracy) = 72%

Additional Notes

Run schedule – On request

Other Specifications

Version 1.0, Last updated:xx

Paranoia

Brief Description

Application to identify instances of paranoia. Paranoia may be due to a diagnosis of paranoid schizophrenia or may be due to other causes, e.g. substance abuse.

Development Approach

Development approach: Machine-learning.

Classification of past or present symptom: Both.

Classes produced: Positive

Output and Definitions

The output includes-

Examples of positive mentions:

“vague paranoid ideation”,

“caused him to feel paranoid”

Examples of negative / irrelevant mentions (not included in the output):

“denied any paranoia”,

“no paranoid feelings”

“relative is paranoid about me”,

“paranoia can cause distress”

Search term(s): *paranoi*
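Some irrelevant examples, such as “relative is paranoid about me” and “relative shows FTD”, describe someone other than the patient. The minimal sketch below checks who experiences the symptom. It assumes a hypothetical list of family-member cues and a fixed window of preceding words. `experiencer` is a hypothetical name.

```python
import re

# Hypothetical cue words signalling that someone other than the patient is described.
OTHER_EXPERIENCERS = {
    "relative", "mother", "father", "sister", "brother",
    "partner", "wife", "husband", "son", "daughter",
}


def experiencer(sentence: str, target: str, window: int = 4) -> str:
    """Return 'other' if a non-patient cue precedes `target` within `window` words."""
    lowered = sentence.lower()
    position = lowered.find(target.lower())
    if position < 0:
        return "absent"
    preceding = re.findall(r"\w+", lowered[:position])[-window:]
    return "other" if OTHER_EXPERIENCERS.intersection(preceding) else "patient"
```

This rule has a clear limitation. `experiencer("her husband was minimising her hallucinations", "hallucinat")` returns "other", but the catalogue lists that sentence as a positive mention for the patient.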

Evaluated Performance

Cohen's k = 92% (testing done on 100 random documents).

Instance level (testing done on 100 random documents):

Precision (specificity / accuracy) = 86%

Recall (sensitivity / coverage) = 94%

Additional Notes

Run schedule – Monthly

Other Specifications

Version 1.0, Last updated:xx

DOI

Passivity

Brief Description

Application to identify instances of passivity.

Development Approach

Development approach: Machine-learning.

Classification of past or present symptom: Both.

Classes produced: Positive

Output and Definitions

The output includes-

Examples of positive mentions:

“patient describes experiencing passivity”,

“patient has experienced passivity in the past but not on current admission”

Examples of negative / irrelevant mentions (not included in the output):

“denies passivity”,

“no passivity”

“passivity could not be discussed”,

“possible passivity requiring further exploration”,

“unclear whether this is passivity or another symptom”

Search term(s): passivity

Evaluated Performance

Cohen's k = 83% (testing done on 438 random documents).

Instance level (testing done on 100 random documents):

Precision (specificity / accuracy) = 89%

Recall (sensitivity / coverage) = 100%

Additional Notes

Run schedule – On request

Other Specifications

Version 1.0, Last updated:xx

Persecutory Ideation

Brief Description

Application to identify instances of ideas of persecution.

Development Approach

Development approach: Machine-learning.

Classification of past or present symptom: Both.

Classes produced: Positive

Output and Definitions

The output includes-

Examples of positive mentions:

“she was having delusions of persecution”,

“she suffered persecutory delusions”,

“marked persecutory delusions”,

“paranoid persecutory ideations”,

“persecutory ideas present”

Examples of negative / irrelevant mentions (not included in the output):

“denies persecutory delusions”,

“he denied any worries of persecution”,

“this might not be a persecutory belief”,

“no longer experiencing persecutory delusions”

Search term(s): [Pp]ersecu*

Evaluated Performance

Cohen's k = 91% (testing done on 50 random documents).

Instance level (testing done on 100 random documents):

Precision (specificity / accuracy) = 80%

Recall (sensitivity / coverage) = 96%

Additional Notes

Run schedule – Monthly

Other Specifications

Version 1.0, Last updated:xx

Poor Appetite

Brief Description

Application to identify instances of poor appetite (negative annotations).

Development Approach

Development approach: Machine-learning.

Classification of past or present symptom: Both.

Classes produced: Positive

Output and Definitions

The output includes positive annotations (applied to adjectives implying a good or normal appetite):

“Appetite fine”,

“Appetite and sleep OK”,

“Appetite reasonable”,

“appetite alright”,

“sleep and appetite both preserved”

Negative annotations (not included in the output):

“loss of appetite”,

“reduced appetite”,

“decrease in appetite”,

“not so good appetite”,

“diminished appetite”,

“lack of appetite”

Unknown annotations (not included in the output):

“Loss of appetite as a potential side effect”,

Mentions of appetite as an early warning sign or as a description of a diagnosis (rather than patient experience), mentions describing a relative rather than the patient, and ‘appetite suppressants’.

Search term(s): *appetite* within the same sentence as *eat* *well*, *alright*, excellent*, fine*, fair*, good*, healthy, intact*, not too bad*, no problem, not a concern*.
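The Poor Appetite search looks for *appetite* in the same sentence as one of the listed descriptors. The minimal sketch below shows that same-sentence co-occurrence search. It treats each trailing `*` as "any word ending" and simplifies *eat* *well* to phrases such as 'eating well'. It also uses a naive sentence splitter. All three choices are assumptions.

```python
import re

APPETITE = re.compile(r"\w*appetite\w*", re.IGNORECASE)
# Descriptors from the search-term list, with '*' read as 'any word ending'.
DESCRIPTORS = re.compile(
    r"\b(eat\w*\s+well\w*|alright|excellent\w*|fine\w*|fair\w*|good\w*|healthy|"
    r"intact\w*|not too bad\w*|no problem|not a concern\w*)\b",
    re.IGNORECASE,
)


def appetite_candidates(text: str) -> list[str]:
    """Sentences where an appetite term co-occurs with a good/normal descriptor."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?;])\s+", text) if s.strip()]
    return [s for s in sentences if APPETITE.search(s) and DESCRIPTORS.search(s)]
```

“Not so good appetite”, which the catalogue lists as a negative annotation, still passes this search because it contains 'good'. The candidates therefore need the classification step.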

Evaluated Performance

Cohen's k = 91% (testing done on 50 random documents).

Instance level (testing done on 100 random documents):

Precision (specificity / accuracy) = 83%

Recall (sensitivity / coverage) = 71%

Patient level testing done on all patients with primary diagnosis code F32* or F33* in a structured field (testing done on a random sample of 30 documents, one document per patient):

Precision (specificity / accuracy) = 97%

Additional Notes

Run schedule – Monthly

Other Specifications

Version 1.0, Last updated:xx

Poor Concentration

Brief Description

Application to identify instances of poor concentration.

Development Approach

Development approach: Machine-learning.

Classification of past or present symptom: Both.

Classes produced: Positive

Output and Definitions

The output includes positive mentions only.

Examples of positive mentions:

“my concentration is still poor”,

“she found it difficult to concentrate”,

“he finds it hard to concentrate”

Examples of negative / irrelevant mentions (not included in the output):

“good attention and concentration”,

“participating well and able to concentrate on activities”,

“gave her a concentration solution”,

“talk concentrated on her difficulties”

Search term(s): *concentrat*

Evaluated Performance

Cohen's k = 95% (testing done on 100 random documents).
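Cohen’s k is Cohen’s kappa, a measure of agreement between two annotators that corrects for chance: κ = (p_o - p_e) / (1 - p_e), where p_o is the observed proportion of agreement and p_e is the agreement expected from each annotator’s label frequencies. The catalogue reports it as a percentage. A minimal sketch for two annotators labelling the same mentions; the label strings and example data are hypothetical.

```python
from collections import Counter


def cohens_kappa(rater_a: list[str], rater_b: list[str]) -> float:
    """Cohen's kappa for two annotators who labelled the same items."""
    if not rater_a or len(rater_a) != len(rater_b):
        raise ValueError("need two equally long, non-empty lists of labels")
    n = len(rater_a)
    # p_o: proportion of items on which the two annotators agree.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # p_e: agreement expected by chance from each annotator's label frequencies.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if p_e == 1.0:
        # Kappa is undefined when both annotators used one identical label
        # throughout; returning 1.0 here is a convention chosen for this sketch.
        return 1.0
    return (p_o - p_e) / (1 - p_e)


# Hypothetical labels from two annotators for the same four mentions.
annotator_1 = ["positive", "positive", "negative", "negative"]
annotator_2 = ["positive", "negative", "negative", "negative"]
print(f"kappa = {cohens_kappa(annotator_1, annotator_2):.0%}")  # prints: kappa = 50%
```

Here the annotators agree on 3 of 4 mentions (p_o = 0.75), but chance alone would give p_e = 0.5, so kappa is 0.5 rather than 0.75.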

Instance level (testing done on 100 random documents):

Precision (specificity / accuracy) = 84%

Recall (sensitivity / coverage) = 60%

Patient level (all patients with primary diagnosis code F32* or F33*; testing done on 50 random documents):

Precision (specificity / accuracy) = 76%
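Assuming the standard definitions, instance-level precision is the share of the app’s positive annotations that are confirmed on manual review, and recall is the share of mentions the reviewer marked as positive that the app also found. A minimal sketch from hypothetical true-positive (tp), false-positive (fp) and false-negative (fn) counts; returning 0.0 for an empty denominator is a convention chosen here, not something the catalogue specifies.

```python
def precision(true_positives: int, false_positives: int) -> float:
    """Fraction of the app's positive annotations that the reviewer confirmed."""
    returned = true_positives + false_positives
    return true_positives / returned if returned else 0.0


def recall(true_positives: int, false_negatives: int) -> float:
    """Fraction of the reviewer's positive mentions that the app also found."""
    relevant = true_positives + false_negatives
    return true_positives / relevant if relevant else 0.0


# Hypothetical counts for illustration only (not taken from any evaluation).
tp, fp, fn = 40, 10, 20
print(f"precision = {precision(tp, fp):.0%}, recall = {recall(tp, fn):.0%}")
# prints: precision = 80%, recall = 67%
```

A large gap between the two, as in several entries here, means the app's positive annotations are usually right but it misses a sizeable share of true mentions.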

Additional Notes

Run schedule – Monthly

Other Specifications

Version 1.0, last updated: xx

Poor Eye Contact


Brief Description

Application to identify instances of poor eye contact.

Development Approach

Development approach: Machine-learning.

Classification of past or present symptom: Both.

Classes produced: Positive

Output and Definitions

The output includes positive mentions only.

Examples of positive mentions:

“looked unkempt, quiet voice, poor eye contact”,

“eye contact was poor”,

“she refused eye contact”,

“throughout the conversation she failed to maintain eye contact”,

“unable to engage in eye contact”,

“eye contact was very limited”,

“no eye contact and constantly looking at floor”

Examples of negative / irrelevant mentions (not included in the output):

“good eye contact”,

“he was comfortable with eye contact”,

“she showed increased eye contact”,

“I noticed reduced eye contact today”

Search term(s): Available on request

Evaluated Performance

Cohen’s k = 92% (testing done on 100 random documents).

Patient level testing done on all patients (testing done on 100 random documents):

Precision (specificity / accuracy) = 81%

Recall (sensitivity / coverage) = 65%

Additional Notes

Run schedule – Monthly

Other Specifications

Version 1.0, last updated: xx

Poor Insight


Brief Description

Application to identify instances of poor insight.

Development Approach

Development approach: Machine-learning.

Classification of past or present symptom: Both.

Classes produced: Positive

Output and Definitions

The output includes positive mentions only.

Examples of positive mentions:

“Lacking/ Lack of insight”

“Doesn’t have insight”

“No/ None insight”

“Poor insight”

“Limited insight”

“Insightless”

“Little insight”

Examples of negative / irrelevant mentions (not included in the output):

“Clear insight”

“Had/ Has insight”

Lengthy and unclear descriptions of the patient’s insight, without a final, specific verdict

“Insight was not assessed”

Search term(s): insight

Evaluated Performance

Cohen’s k = 88% (testing done on 50 random documents).

Instance level (testing done on 100 random documents):

Precision (specificity / accuracy) = 87%

Recall (sensitivity / coverage) = 70%

Additional Notes

Run schedule – Monthly

Other Specifications

Version 1.0, last updated: xx

DOI

Poor Motivation


Brief Description

This application aims to identify instances of poor motivation.

Development Approach

Development approach: Machine-learning.

Classification of past or present symptom: Both.

Classes produced: Positive

Output and Definitions

The output includes positive mentions only.

Examples of positive mentions:

“poor motivation”,

“unable to motivate self”,

“difficult to motivate self”,

“struggling with motivation”

Examples of negative / irrelevant mentions (not included in the output):

“patient has good general motivation”,

“participate in alcohol rehabilitation”,

“tasks/groups designed for motivation”,

Comments about motivation that do not clearly indicate whether it was high or low

Search term(s): "Motivat*" and "lack*", "poor", "struggle*", or "no"
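One possible reading of these search terms is a boolean query: a sentence must contain a word beginning with "motivat" and at least one of the cue words. The sketch below assumes that reading, sentence-level scope and case-insensitive matching, and treats "lack*" and "struggle*" as prefixes and "poor" and "no" as whole words. Read this literally, the terms would not retrieve some positive examples above ("struggling" does not begin with "struggle", and "unable to motivate self" contains no cue word), so treat it as an illustration of the query structure only. The names are hypothetical.

```python
import re

# Assumed reading: word starting with "motivat" AND at least one cue word.
MOTIVATION = re.compile(r"\bmotivat\w*", re.IGNORECASE)
CUES = re.compile(r"\b(?:lack\w*|poor|struggle\w*|no)\b", re.IGNORECASE)


def matches_motivation_search(sentence: str) -> bool:
    """True if the sentence satisfies the assumed boolean search."""
    return bool(MOTIVATION.search(sentence) and CUES.search(sentence))


for example in ["poor motivation", "He lacks motivation to attend groups",
                "patient has good general motivation"]:
    print(example, "->", matches_motivation_search(example))
```

The word boundaries matter: `\bno\b` does not fire on "not" or "know", which keeps the cue list from matching far more text than intended.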

Evaluated Performance

Cohen's k = 88% (testing done on 50 random documents).

Instance level (testing done on 100 random documents):

Precision (specificity / accuracy) = 85%

Recall (sensitivity / coverage) = 45%

Additional Notes

Run schedule – Monthly

Other Specifications

Version 1.0, last updated: xx

DOI

Poverty Of Speech


Brief Description

Application to identify poverty of speech.

Development Approach

Development approach: Machine-learning.

Classification of past or present symptom: Both.

Classes produced: Positive

Output and Definitions

The output includes positive mentions only.

Examples of positive mentions:

“he continues to display negative symptoms including blunting of affect, poverty of speech”,

“he does have negative symptoms in the form of poverty of speech”

“less poverty of speech”

Examples of negative / irrelevant mentions (not included in the output):

“no poverty of speech”,

“poverty of speech not observed”,

“poverty of speech is a common symptom of…”,

“?poverty of speech”
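The app itself is a machine-learning classifier. To make the distinction between positive and negative mentions concrete, here is a deliberately simple rule-based baseline in the spirit of NegEx-style negation rules. It is not the app’s method, and its cue lists are hypothetical, covering only the examples above. It also shows the limits of such rules: it labels the generic statement “poverty of speech is a common symptom of…” as positive, although the catalogue lists that mention as irrelevant.

```python
import re

# Target phrase, allowing any whitespace between the words.
TARGET = re.compile(r"poverty\s+of\s+speech", re.IGNORECASE)
# Hypothetical cue lists covering only the examples in this entry.
NEGATION_BEFORE = re.compile(r"(?:\bno\b|\bno evidence of\b|\?)\s*$", re.IGNORECASE)
NEGATION_AFTER = re.compile(r"^\s*(?:not observed|absent)\b", re.IGNORECASE)


def label_mention(sentence: str) -> str:
    """Label a sentence 'positive', 'negative' or 'no mention' using simple rules."""
    match = TARGET.search(sentence)
    if match is None:
        return "no mention"
    before, after = sentence[: match.start()], sentence[match.end():]
    if NEGATION_BEFORE.search(before) or NEGATION_AFTER.search(after):
        return "negative"
    return "positive"


for example in ["no poverty of speech", "poverty of speech not observed",
                "?poverty of speech", "less poverty of speech"]:
    print(example, "->", label_mention(example))
```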

Search term(s): speech within the same sentence as poverty or impoverish.

Evaluated Performance

Cohen's k = 100% (testing done on 50 random documents).

Instance level (testing done on 100 random documents):

Precision (specificity / accuracy) = 87%

Recall (sensitivity / coverage) = 85%

Additional Notes

Run schedule – Monthly

Other Specifications

Version 1.0, last updated: xx

DOI

Poverty Of Thought


Brief Description

Application to identify instances of poverty of thought.

Development Approach

Development approach: Machine-learning.

Classification of past or present symptom: Both.

Classes produced: Positive

Output and Definitions

The output includes positive mentions only.

Examples of positive mentions:

“poverty of thought was very striking”,

“evidence of poverty of thought”,

“some poverty of thought”

Examples of negative / irrelevant mentions (not included in the output):

“no poverty of thought”,

“no evidence of poverty of thought”

“poverty of thought needs to be assessed”,

“poverty of thought among other symptoms”

Search term(s): *poverty* *of* *thought*

Evaluated Performance